This article explains the media architecture of the Android platform and then walks through the steps to add your own vendor-specific hardware encoder/decoder to the Android framework as OpenMAX components.

What is a codec?

A codec is a hardware device or a computer program that processes input data into output data. The encoder part of a codec encodes a data stream or signal for transmission and storage, possibly in encrypted form, and the decoder part reverses the encoding for playback or editing. Codecs are widely used in applications such as video conferencing, streaming media, and video editing. A few common types of video codec are H.264 encoders and decoders, MPEG encoders and decoders, and MJPEG encoders and decoders.

MediaCodec in Android

Android includes Stagefright, a media playback engine at the native level that has built-in software-based codecs for popular media formats. Stagefright audio and video playback features include integration with OpenMAX codecs, session management, time-synchronized rendering, transport control and DRM (known as Digital Restrictions Management or Digital Rights Management). Stagefright also supports the integration of custom hardware codecs provided by the device vendor.

Architecture

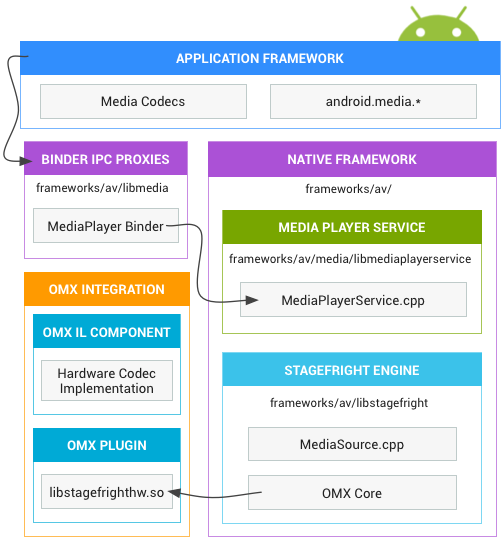

The block diagram above shows the media architecture in Android.

As the diagram shows, the media architecture consists mainly of the following classes and components.

- MediaCodec Class

- MediaPlayer Service

- Stagefright Engine

- OMX Components

Stagefright Engine and MediaPlayer Service

At the native level, Android provides a multimedia framework that uses the Stagefright engine for audio and video recording and playback. Stagefright comes with a default list of supported software codecs, and you can add your own hardware codec by using the OpenMAX Integration Layer standard. For more implementation details, see the MediaPlayer and Stagefright components located in the following AOSP paths.

frameworks/av/media/libmedia

frameworks/av/media/libmediaplayerservice

frameworks/av/media/libstagefright

MediaCodec Class

The MediaCodec class can be used to access low-level media codecs, i.e. encoder/decoder components. It first became available in Android 4.1 (API 16). Its main purpose is to give applications access to the underlying hardware and software codecs on the device. So if you want to use your custom hardware/software codec in your Android application, you need to register the codec with the Android framework. This is done with the steps below.

Steps to register your codec in Android

In the Android framework, codecs are registered through media_codecs.xml. Below is an example encoder and decoder block from a media_codecs.xml file.

<MediaCodecs>

<Encoders>

<!-- video Encoder -->

<MediaCodec name="OMX.qcom.video.encoder.h263" type="video/3gpp" >

<Quirk name="requires-allocate-on-input-ports" />

<Quirk name="requires-allocate-on-output-ports"/>

<Quirk name="requires-loaded-to-idle-after-allocation"/>

</MediaCodec>

</Encoders>

<Decoders>

<!-- video Decoder -->

<MediaCodec name="OMX.qcom.video.decoder.mpeg4" type="video/mp4v-es" >

<Quirk name="requires-allocate-on-input-ports" />

<Quirk name="requires-allocate-on-output-ports"/>

<Quirk name="defers-output-buffer-allocation"/>

</MediaCodec>

</Decoders>

</MediaCodecs>

The standard Android distribution includes an example media_codecs.xml. The Stagefright service parses system/etc/media_codecs.xml and system/etc/media_profiles.xml to expose the codecs and profiles supported on the device to applications via the android.media.MediaCodecList and android.media.CamcorderProfile classes. So you need to create or edit your own media_codecs.xml file and copy it to the system image’s system/etc directory during your AOSP build.

Example

To register a video decoder, add a new entry under the Decoders list. For an encoder, add a new entry under the Encoders list. To make sure your codec is always picked, list it as the first entry for its MIME type. Below are example entries for an H.264 encoder and decoder in the media_codecs.xml file.

An example H.264 encoder:

<Encoders>

<MediaCodec name="OMX.vendor-name.h264.encode" type="video/avc" >

<Limit name="size" min="48x48" max="3840x2176" />

<Limit name="bitrate" range="1-50000000" />

</MediaCodec>

</Encoders>

An example H.264 decoder:

<Decoders>

<MediaCodec name="OMX.vendor-name.h264.decoder" type="video/avc" >

<Limit name="size" min="32x32" max="3840x2176" />

<Limit name="bitrate" range="1-62500000" />

</MediaCodec>

</Decoders>

Here, OMX.vendor-name.h264.encode and OMX.vendor-name.h264.decoder are the names of your components, and video/avc is the MIME type they handle; in this example it denotes AVC / H.264 video. The two Limit entries declare the frame sizes and the bitrate range that the codec supports. Quirks or special requirements of a codec, if any, are declared with Quirk entries as in the earlier example. Your custom codec is now registered with the Android framework. The next step is to integrate it in the OMX layer.
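To illustrate why the position of an entry matters, here is a minimal, self-contained C++ sketch of a "first match wins" lookup. It is only a model of the behaviour described above, not the framework's real parser or data structure: `CodecEntry`, `findFirstCodecForType` and `exampleDecoders` are hypothetical names, and the assumption is that entries are considered in the order they appear in the file.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Simplified stand-in for one <MediaCodec> entry in media_codecs.xml.
// Illustrative model only; this is not a framework type.
struct CodecEntry {
    std::string name;  // component name, e.g. "OMX.vendor-name.h264.decoder"
    std::string type;  // MIME type, e.g. "video/avc"
};

// Returns the first entry whose MIME type matches, or nullptr if none does.
// Entries are scanned in list order, so earlier entries take priority.
const CodecEntry *findFirstCodecForType(const std::vector<CodecEntry> &entries,
                                        const std::string &mime) {
    assert(!mime.empty());
    for (const CodecEntry &entry : entries) {
        if (entry.type == mime) {
            return &entry;
        }
    }
    return nullptr;
}

// Example decoder list: the vendor decoder is listed ahead of a software
// decoder for the same MIME type, so it is the one that gets selected.
std::vector<CodecEntry> exampleDecoders() {
    return {
        {"OMX.vendor-name.h264.decoder", "video/avc"},
        {"OMX.google.h264.decoder", "video/avc"},
        {"OMX.qcom.video.decoder.mpeg4", "video/mp4v-es"},
    };
}
```

With this list, a lookup for video/avc yields the vendor decoder; swapping the first two entries would make the software decoder win instead.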

OMX component

What is OpenMAX (OMX)?

OpenMAX (Open Media Acceleration) is a royalty-free, cross-platform open standard, defined as a set of C-language programming interfaces, for accelerating the capture and presentation of audio, video, and images in multimedia applications on embedded and mobile devices. OpenMAX is managed by the non-profit technology consortium Khronos Group. OpenMAX provides three layers of interfaces:

- Application layer (AL)

- Integration layer (IL)

- Development layer (DL)

Application layer (AL)

OpenMAX AL is the interface between multimedia applications, such as a media player, and the platform media framework. In Android, this layer is exposed as the MediaCodec class.

Integration layer (IL)

OpenMAX IL is the interface between a media framework and a set of multimedia components, such as audio or video codecs. In our case the media framework is Stagefright or the MediaCodec API on Android; on Windows it would be DirectShow, on Linux FFmpeg or Libav, and GStreamer is a cross-platform example. So if you want to add hardware codec support to the Android OpenMAX layer, it has to be done in this layer. To do this, you must create the OMX components and an OMX plugin that hooks your custom codecs into the Stagefright framework. For example components, refer to the example plugin for the Galaxy Nexus in hardware/ti/omap4xx/libstagefrighthw.

To add your own codecs in the OpenMAX IL layer

- Create your components according to the OpenMAX IL component standard. The component interface is located in the frameworks/native/include/media/openmax/OMX_Component.h file.

- Create an OpenMAX plugin that links your components with the Stagefright service. For the interfaces needed to create the plugin, see the frameworks/native/include/media/hardware/OMXPluginBase.h and HardwareAPI.h header files.

- Build your plugin as a shared library with the name libstagefrighthw.so in your product Makefile. This library will be placed in /system/lib on the target system. It must expose a createOMXPlugin symbol, which is looked up with dlsym.

- OMXMaster invokes addVendorPlugin, which internally invokes addPlugin(“libstagefrighthw.so”). In addPlugin, the createOMXPlugin symbol is looked up and called to obtain the plugin, through which the other entry points such as makeComponentInstance and destroyComponentInstance become available.
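The shape of such a plugin can be sketched in plain C++. This is a hedged illustration, not the real interface: `FakePluginBase`, `FakeComponent`, `FakeError` and `VendorPlugin` are hypothetical stand-ins so that the example compiles without the Android headers, and the real method signatures in OMXPluginBase.h take additional OpenMAX arguments. Only the factory name createOMXPlugin and the method names makeComponentInstance and destroyComponentInstance come from the steps above.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// --- Stand-ins for the real OpenMAX/Stagefright types (assumptions). ---
// A real plugin would use the types from OMX_Component.h and OMXPluginBase.h.
struct FakeComponent {
    std::string name;
};

enum FakeError { kOk = 0, kComponentNotFound = 1 };

// Simplified mirror of a plugin base class: the framework talks to the
// vendor library only through virtual calls like these.
class FakePluginBase {
public:
    virtual ~FakePluginBase() {}
    virtual FakeError makeComponentInstance(const char *name,
                                            FakeComponent **component) = 0;
    virtual FakeError destroyComponentInstance(FakeComponent *component) = 0;
};

// Hypothetical vendor plugin that knows exactly one component name.
class VendorPlugin : public FakePluginBase {
public:
    FakeError makeComponentInstance(const char *name,
                                    FakeComponent **component) override {
        assert(name != nullptr && component != nullptr);
        if (std::strcmp(name, "OMX.vendor-name.h264.decoder") != 0) {
            *component = nullptr;
            return kComponentNotFound;
        }
        *component = new FakeComponent{name};
        return kOk;
    }

    FakeError destroyComponentInstance(FakeComponent *component) override {
        delete component;
        return kOk;
    }
};

// Factory exported with C linkage so that a loader can find it by its
// unmangled name with dlsym(handle, "createOMXPlugin").
extern "C" FakePluginBase *createOMXPlugin() {
    return new VendorPlugin();
}
```

The design point is that the loader needs to know only one symbol name; everything else is reached through the virtual methods of the object that the factory returns.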

Note:

In some cases, the “libstagefrighthw.so” library is supplied as a pre-built binary by your codec vendor, since it may contain proprietary information. In that case the first two steps have already been done by the vendor, so you only need to make sure you declare the module as a product package.

Development layer (DL)

OpenMAX DL is the interface between physical hardware, such as digital signal processor (DSP) chips, CPUs and GPUs, and software, such as video codecs and 3D engines. It allows codec and chip vendors to integrate new hardware that supports OpenMAX DL without re-optimising their low-level software.

OpenMAX core implementation in Android

The OpenMAX implementation in Android can be found at the following locations.

- Source Code : /AOSP/frameworks/av/media/libstagefright/omx

- Include Files : /AOSP/frameworks/native/include/media/openmax

- Shared lib name : libstagefright_omx.so

Changes to libstagefright

- In some cases, you may need to add the MIME type supported by your hardware codec component to the Stagefright engine. This tells the top-level application framework that the Stagefright engine supports the new MIME type.

- The support has to be added in the ACodec.cpp file in frameworks/av/media/libstagefright. For example, if your MIME type is not listed in the “MimeToRole” and “VideoCodingMapEntry” structures, you need to add it there. You can look at “MEDIA_MIMETYPE_VIDEO_H263” to see how an existing type is registered with libstagefright.

struct MimeToRole {

const char *mime;

const char *decoderRole;

const char *encoderRole;

};
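As a rough illustration of how a table of this structure is used, the sketch below maps a MIME type to a component role. It is a standalone model rather than the code in libstagefright: `kMimeToRole`, `equalsIgnoreCase` and `roleForMime` are names chosen for this example, and the last table row is a hypothetical vendor MIME type with made-up role strings, included only to show where a new entry would go.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>

struct MimeToRole {
    const char *mime;
    const char *decoderRole;
    const char *encoderRole;
};

// Illustrative table. The last row is a hypothetical vendor-specific entry.
static const MimeToRole kMimeToRole[] = {
    {"video/avc", "video_decoder.avc", "video_encoder.avc"},
    {"video/3gpp", "video_decoder.h263", "video_encoder.h263"},
    {"video/x-vendor-example", "video_decoder.vendor", "video_encoder.vendor"},
};

// Case-insensitive comparison of two NUL-terminated strings.
static bool equalsIgnoreCase(const char *a, const char *b) {
    while (*a != '\0' && *b != '\0') {
        if (std::tolower(static_cast<unsigned char>(*a)) !=
            std::tolower(static_cast<unsigned char>(*b))) {
            return false;
        }
        ++a;
        ++b;
    }
    return *a == *b;  // true only if both strings ended together
}

// Returns the role string for the MIME type, or nullptr if it is not listed.
const char *roleForMime(const char *mime, bool isEncoder) {
    assert(mime != nullptr);
    const size_t count = sizeof(kMimeToRole) / sizeof(kMimeToRole[0]);
    for (size_t i = 0; i < count; ++i) {
        if (equalsIgnoreCase(mime, kMimeToRole[i].mime)) {
            return isEncoder ? kMimeToRole[i].encoderRole
                             : kMimeToRole[i].decoderRole;
        }
    }
    return nullptr;
}
```

A MIME type that is missing from the table yields no role, which is why an unlisted type has to be added before the component can be configured for it.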

References

- https://source.android.com/devices/media/

- https://www.khronos.org/files/openmax-al-1-1-quick-reference.pdf

- https://www.khronos.org/openmax/

Portions of this page are reproduced from work created and shared by the Android Open Source Project and used according to terms described in the Creative Commons 2.5 Attribution License.