This article covers the Android audio architecture: the audio frameworks, the role of AudioFlinger, and the audio HAL and its implementation details.

What is Android Audio Architecture?

In general, audio involves signal processing that covers many operations and provides functionality such as playing music, recording from the microphone, real-time voice communication, and audio effects such as echo cancellation and noise suppression. The audio architecture in Android has to handle many aspects: supporting a range of hardware (HDMI, earpiece, headset, speaker, microphone, Bluetooth SCO, A2DP, etc.), meeting real-time requirements, and serving different software such as media players and recorders, VoIP applications, SIP applications, and phone calls.

OS Details

Applies directly to Android 5.1 and lower; may require a few changes for Android 6.0 and Android 7.0.

Overview:

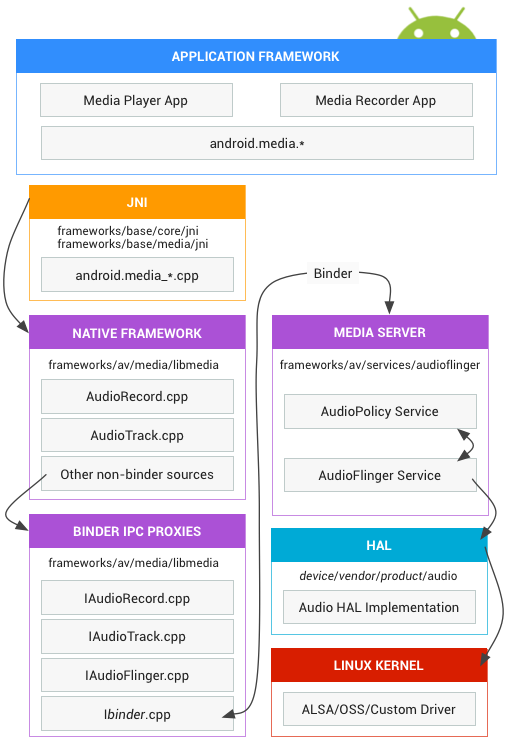

The block diagram above shows the audio architecture in Android.

Before going into platform-specific details, let us look at a few basics of audio and PCM terminology that are useful when developing an audio HAL for ALSA-based audio devices.

Basics of Audio

An audio signal is a representation of sound, typically as an electrical voltage. Audio signals have frequencies in the audio frequency range of roughly 20 to 20,000 Hz (the limits of human hearing). Audio signals fall into two basic types:

- Analog: Analog refers to audio recorded using methods that replicate the original sound waves. Example: human speech, sound from musical instruments

- Digital: Digital audio is recorded by taking samples of the original sound wave at a specified rate. Example: CDs, MP3 files

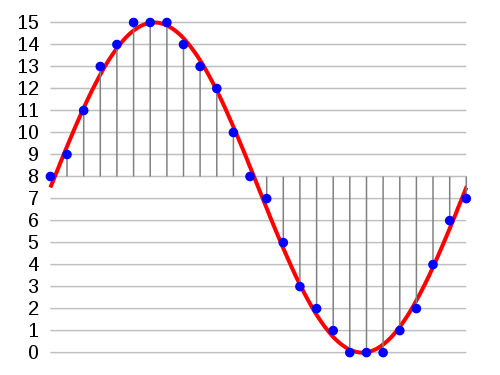

Conversion of analog audio signals to digital audio is usually done by pulse-code modulation (PCM). In the image above, an analog sine wave is sampled and quantized for PCM; this process is called modulation or analog-to-digital conversion (ADC). The reverse process is called demodulation or digital-to-analog conversion (DAC).

Here are a few PCM terms and concepts that are used in audio device drivers.

Samples: In PCM audio, both input and output are represented as samples. A single sample represents the amplitude of the sound at a certain point in time. Many individual samples are needed to represent an actual audio signal. For example, CD audio takes 44100 samples every second, DVD audio typically takes 48000 samples/sec, and VoIP/SIP/telephony typically uses 8000 samples/sec.
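As a rough illustration of sampling and quantization, the sketch below evaluates a signal at a fixed rate and rounds each amplitude to a signed 16-bit PCM value. The function names (`quantize_s16`, `triangle_1khz`, `sample_signal_s16`) are made up for this example, and a triangle wave stands in for the sine wave only to keep the code free of math-library dependencies.

```c
#include <stddef.h>
#include <stdint.h>

/* Quantize one analog amplitude in [-1.0, 1.0] to a signed 16-bit PCM sample.
 * Out-of-range input is clipped, much as a converter would saturate. */
static int16_t quantize_s16(double amplitude)
{
    if (amplitude > 1.0)
        amplitude = 1.0;
    if (amplitude < -1.0)
        amplitude = -1.0;

    /* Scale to the 16-bit range and round to the nearest integer step. */
    double scaled = amplitude * 32767.0;
    return (int16_t)(scaled >= 0.0 ? scaled + 0.5 : scaled - 0.5);
}

/* Hypothetical "analog" source: a 1 kHz triangle wave, t in seconds (t >= 0). */
static double triangle_1khz(double t)
{
    double cycles = t * 1000.0;
    double phase = cycles - (double)(long)cycles;      /* fractional part, 0..1 */
    double dist = phase < 0.5 ? 0.5 - phase : phase - 0.5;
    return 4.0 * dist - 1.0;                           /* +1 at phase 0, -1 at 0.5 */
}

/* Sampling: evaluate the signal every 1/rate_hz seconds and quantize it. */
static void sample_signal_s16(int16_t *out, size_t n, double rate_hz,
                              double (*signal)(double t))
{
    for (size_t i = 0; i < n; i++)
        out[i] = quantize_s16(signal((double)i / rate_hz));
}
```

Calling `sample_signal_s16(buf, 8, 8000.0, triangle_1khz)` fills `buf` with one full cycle of the wave at the telephony rate of 8000 samples/sec.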

Channels: An audio channel is a single path that carries one audio signal, used in operations such as multi-track recording and sound reinforcement. Channels are generally classified into two types:

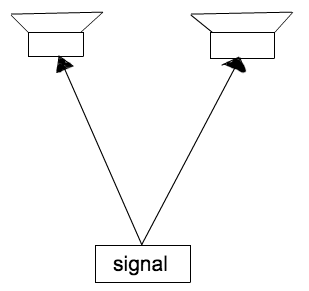

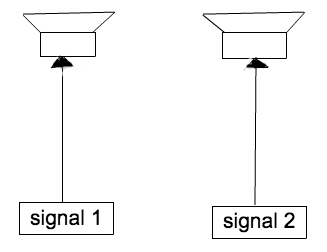

- MONO channel: It uses a single signal to feed all the speakers.

- STEREO channels: They use more than one signal (usually two) to feed different speakers with different signals.

Frame: A frame holds one sample for each channel at a given point in time. For mono sound, a frame consists of a single sample, whereas for stereo (2 channels), each frame consists of two samples.

Frame size: Frame size is the size in bytes of each frame. It varies with the number of channels and the sample width. For example, with 16-bit samples the frame size is 2 bytes for mono and 4 bytes for stereo.

Sample Rate: Sample rate, or simply “rate”, is the number of samples per second on each channel, which equals the number of frames per second. PCM sound consists of a flow of sound frames.

Data rate: Data rate is the number of bytes that must be recorded or provided per second at a given frame size and rate, that is, the frame size multiplied by the rate.

Period: A period is the block of frames transferred to or from the hardware in one go. The hardware typically raises an interrupt once per period, so the period also sets the interval between processing operations.

Period size: This is the size of each period, expressed in frames (or, equivalently, in bytes).

These concepts are very useful when you work on an audio HAL for different hardware devices such as HDMI audio, Bluetooth SCO audio devices, etc.
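The relationships between these terms can be sketched as a few small helper functions. The function names are invented for this example and are not part of ALSA or the Android HAL; the arithmetic is the standard PCM arithmetic for linear, interleaved samples.

```c
#include <stddef.h>
#include <stdint.h>

/* Bytes in one frame: one sample per channel. */
static size_t pcm_frame_bytes(unsigned channels, unsigned bits_per_sample)
{
    return (size_t)channels * (bits_per_sample / 8);
}

/* Bytes per second that must be produced or consumed. */
static size_t pcm_data_rate(unsigned channels, unsigned bits_per_sample,
                            unsigned rate_hz)
{
    return pcm_frame_bytes(channels, bits_per_sample) * rate_hz;
}

/* Bytes in one period that is period_frames frames long. */
static size_t pcm_period_bytes(unsigned period_frames, unsigned channels,
                               unsigned bits_per_sample)
{
    return (size_t)period_frames * pcm_frame_bytes(channels, bits_per_sample);
}

/* Duration of one period in microseconds. */
static uint64_t pcm_period_usec(unsigned period_frames, unsigned rate_hz)
{
    return (uint64_t)period_frames * 1000000u / rate_hz;
}
```

For 16-bit stereo at 44100 Hz this gives a 4-byte frame and a data rate of 176400 bytes per second; a period of 480 frames at 48000 Hz lasts 10 ms.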

Audio Architecture

Android audio architecture defines how audio functionality is implemented and points to the relevant source code involved in the implementation.

Application framework

The application framework includes the app code, which uses the android.media APIs to interact with audio hardware. For example, media player, voice recorder, and SIP call applications all use the audio hardware.

JNI

The JNI code associated with android.media calls lower-level native code to access audio hardware. The JNI code is located in the following paths:

- frameworks/base/core/jni/

- frameworks/base/media/jni/

Native framework

The native framework provides a native equivalent to the android.media package, calling Binder IPC proxies to access the audio-specific services of the media server. Native framework code is located in

- frameworks/av/media/libmedia

Binder IPC

Binder IPC proxies facilitate communication over process boundaries. Proxies are located in frameworks/av/media/libmedia and begin with the letter “I”.

Android Audio Manager

The audio policy service is responsible for all actions that require a policy decision to be made first, such as opening a new I/O audio stream, re-routing after a change, and stream volume management. The audio policy source code is located in the following path:

- frameworks/av/services/audiopolicy

The main roles of the audio policy service are:

- Keep track of the current system state (removable device connections, phone state, user requests, etc.).

- Handle system state changes and user actions, which are reported to the audio policy manager through the methods of the audio_policy interface.
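To make the idea of a policy decision concrete, here is a deliberately simplified sketch: it tracks which outputs are connected and picks one using a fixed priority order. Everything in it (the `DEMO_OUT_*` bits, `struct demo_policy_state`, and the priority order itself) is hypothetical and chosen for illustration; the real audio policy manager uses the AUDIO_DEVICE_OUT_* constants and considerably more elaborate rules.

```c
#include <stdint.h>

/* Hypothetical device bits for this sketch only. */
enum {
    DEMO_OUT_NONE          = 0,
    DEMO_OUT_SPEAKER       = 1 << 0,
    DEMO_OUT_WIRED_HEADSET = 1 << 1,
    DEMO_OUT_BT_SCO        = 1 << 2
};

/* Tracked system state: connected outputs and whether a call is active. */
struct demo_policy_state {
    uint32_t connected_outputs;
    int in_call;
};

/* Called when a removable device is plugged in or removed. */
static void demo_set_device_connected(struct demo_policy_state *st,
                                      uint32_t device, int connected)
{
    if (connected)
        st->connected_outputs |= device;
    else
        st->connected_outputs &= ~device;
}

/* Routing decision, assumed priority: SCO during calls, then headset, then speaker. */
static uint32_t demo_select_output(const struct demo_policy_state *st)
{
    if (st->in_call && (st->connected_outputs & DEMO_OUT_BT_SCO))
        return DEMO_OUT_BT_SCO;
    if (st->connected_outputs & DEMO_OUT_WIRED_HEADSET)
        return DEMO_OUT_WIRED_HEADSET;
    if (st->connected_outputs & DEMO_OUT_SPEAKER)
        return DEMO_OUT_SPEAKER;
    return DEMO_OUT_NONE;
}
```

Each state change (a headset being plugged in, a call starting) updates the tracked state, and the next routing query reflects it.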

This service loads the audio_policy.conf file from system/etc/ at startup; the file declares the audio devices present on your product. Below is an example audio_policy.conf file with different supported devices.

    global_configuration {
      attached_output_devices AUDIO_DEVICE_OUT_AUX_DIGITAL|AUDIO_DEVICE_OUT_SPEAKER
      default_output_device AUDIO_DEVICE_OUT_AUX_DIGITAL
      attached_input_devices AUDIO_DEVICE_IN_BUILTIN_MIC|AUDIO_DEVICE_IN_REMOTE_SUBMIX
    }

    audio_hw_modules {
      primary {
        outputs {
          primary {
            sampling_rates 48000
            channel_masks AUDIO_CHANNEL_OUT_STEREO
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_OUT_SPEAKER|AUDIO_DEVICE_OUT_WIRED_HEADSET|AUDIO_DEVICE_OUT_WIRED_HEADPHONE|AUDIO_DEVICE_OUT_AUX_DIGITAL|AUDIO_DEVICE_OUT_ALL_SCO
            flags AUDIO_OUTPUT_FLAG_PRIMARY
          }
          hdmi {
            sampling_rates dynamic
            channel_masks dynamic
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_OUT_AUX_DIGITAL
            flags AUDIO_OUTPUT_FLAG_DIRECT
          }
        }
        inputs {
          primary {
            sampling_rates 8000|11025|16000|22050|24000|32000|44100|48000
            channel_masks AUDIO_CHANNEL_IN_MONO|AUDIO_CHANNEL_IN_STEREO
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_IN_USB_DEVICE|AUDIO_DEVICE_IN_BUILTIN_MIC|AUDIO_DEVICE_IN_ALL_SCO
          }
        }
      }
    }
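Values such as sampling_rates in this file are lists separated by the `|` character. As an illustration of how such a value might be checked, the sketch below tests whether a given rate appears in the list. The function name `rate_list_contains` is invented for this example and is not the parser Android itself uses; non-numeric entries such as `dynamic` are simply treated as not matching.

```c
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

/* Return true if 'rate' appears in a '|'-separated list such as
 * "8000|11025|48000". Non-numeric tokens (e.g. "dynamic") never match. */
static bool rate_list_contains(const char *value, unsigned long rate)
{
    const char *p = value;

    while (p != NULL && *p != '\0') {
        char *end;
        unsigned long v = strtoul(p, &end, 10);

        /* Accept the token only if it is entirely numeric. */
        if (end != p && (*end == '|' || *end == '\0') && v == rate)
            return true;

        /* Move to the character after the next separator, if any. */
        p = strchr(p, '|');
        if (p != NULL)
            p++;
    }
    return false;
}
```

With the input profile above, `rate_list_contains("8000|11025|16000|22050|24000|32000|44100|48000", 44100)` is true, while the same query against `"dynamic"` is false.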

From Android 7.0, AOSP introduces a new audio policy configuration file format (XML) for describing the audio topology. See [audio_config.xml](https://android.googlesource.com/device/google/dragon/+/nougat-dev/audio_policy_configuration.xml) for an example of the XML format.

Note: Android 7.0 preserves support for audio_policy.conf; this legacy format is used by default. To use the XML file format, include the build option USE_XML_AUDIO_POLICY_CONF := 1 in the device makefile.

Media server: AudioFlinger

The media server contains audio services, which are the actual code that interacts with your HAL implementations. The AudioFlinger service code is located in the following path:

- frameworks/av/services/audioflinger

The media server service is launched from the init.rc file with the following entry:

service media /system/bin/mediaserver

The Audio HAL

Android’s audio Hardware Abstraction Layer (HAL) connects the higher-level, audio-specific framework APIs in android.media to the underlying audio driver and hardware. The audio HAL interfaces are located in hardware/libhardware/include/hardware. This section explains how to implement the audio HAL for different hardware. The audio HAL mainly contains two interfaces:

- hardware/libhardware/include/hardware/audio.h. Represents the main functions of an audio device.

- hardware/libhardware/include/hardware/audio_effect.h. Represents effects that can be applied to audio such as downmixing, echo cancellation, or noise suppression.

Device vendors must implement all of these interfaces to support the audio HAL. The audio hardware interface mainly provides basic functionality for:

- Opening/closing audio input/output streams.

- Enabling/disabling audio devices such as EARPIECE, SPEAKER, and BLUETOOTH_SCO.

- Setting the volume for voice calls and muting the microphone.

- Changing audio modes, including AUDIO_MODE_NORMAL for standard audio playback, AUDIO_MODE_IN_CALL when a call is in progress, and AUDIO_MODE_RINGTONE when a ringtone is playing.

Each input/output stream should provide functions to:

- Write/read data.

- Get/set the sample rate.

- Get the channels and buffer size of the current stream.

- Get/set the current format, e.g. AUDIO_FORMAT_PCM_16_BIT.

- Put the stream into standby mode.

- Add/remove an audio effect on the stream.

- Get/set parameters to switch the audio path.
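The stream functions listed above are exposed as a table of function pointers that the HAL fills in. The sketch below shows the pattern with a cut-down, hypothetical `struct demo_stream_out` that has only three callbacks and a "null sink" implementation that discards audio while counting frames. It is not the real `struct audio_stream_out` from audio.h, which has more callbacks and different signatures; 16-bit samples are assumed.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, reduced stream interface for illustration only. */
struct demo_stream_out {
    uint32_t (*get_sample_rate)(const struct demo_stream_out *s);
    int      (*standby)(struct demo_stream_out *s);
    long     (*write)(struct demo_stream_out *s, const void *buf, size_t bytes);

    /* Private state of this example implementation. */
    uint32_t rate_hz;
    size_t   frame_bytes;
    bool     in_standby;
    uint64_t frames_written;
};

static uint32_t demo_get_sample_rate(const struct demo_stream_out *s)
{
    return s->rate_hz;
}

static int demo_standby(struct demo_stream_out *s)
{
    s->in_standby = true;   /* a real HAL would close the PCM device here */
    return 0;
}

static long demo_write(struct demo_stream_out *s, const void *buf, size_t bytes)
{
    (void)buf;              /* null sink: the audio data is discarded */
    s->in_standby = false;  /* a real HAL would (re)open the PCM device here */
    s->frames_written += bytes / s->frame_bytes;
    return (long)bytes;
}

/* Fill in the callbacks; streams start in standby until the first write. */
static void demo_stream_out_init(struct demo_stream_out *s, uint32_t rate_hz,
                                 unsigned channels)
{
    s->get_sample_rate = demo_get_sample_rate;
    s->standby = demo_standby;
    s->write = demo_write;
    s->rate_hz = rate_hz;
    s->frame_bytes = channels * sizeof(int16_t);
    s->in_standby = true;
    s->frames_written = 0;
}
```

The caller only ever goes through the pointers, for example `s.write(&s, buf, bytes)`, which is what lets each hardware vendor plug in its own implementation.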

The audio HAL should implement all of the function callbacks shown below.

    static int adev_open(const hw_module_t* module, const char* name,
                         hw_device_t** device)
    {
        struct audio_device *adev;

        /* Only the audio hardware interface can be opened through this module. */
        if (strcmp(name, AUDIO_HARDWARE_INTERFACE) != 0)
            return -EINVAL;

        adev = calloc(1, sizeof(struct audio_device));
        if (!adev)
            return -ENOMEM;

        /* Set HAL API version */
        adev->hw_device.common.tag = HARDWARE_DEVICE_TAG;
        adev->hw_device.common.version = AUDIO_DEVICE_API_VERSION_2_0;
        adev->hw_device.common.module = (struct hw_module_t *) module;
        adev->hw_device.common.close = adev_close;

        /* Function callbacks */
        adev->hw_device.init_check = adev_init_check;
        adev->hw_device.set_voice_volume = adev_set_voice_volume;
        adev->hw_device.set_master_volume = adev_set_master_volume;
        adev->hw_device.set_mode = adev_set_mode;
        adev->hw_device.set_mic_mute = adev_set_mic_mute;
        adev->hw_device.get_mic_mute = adev_get_mic_mute;
        adev->hw_device.set_parameters = adev_set_parameters;
        adev->hw_device.get_parameters = adev_get_parameters;
        adev->hw_device.get_input_buffer_size = adev_get_input_buffer_size;
        adev->hw_device.open_output_stream = adev_open_output_stream;
        adev->hw_device.close_output_stream = adev_close_output_stream;
        adev->hw_device.open_input_stream = adev_open_input_stream;
        adev->hw_device.close_input_stream = adev_close_input_stream;
        adev->hw_device.dump = adev_dump;

        /* Sampling rate.
         * NOTE: adev->mm_rate must be assigned the platform's default rate
         * before this point; that code is not shown in this excerpt. The
         * pcm_config_* structures are globals defined elsewhere in the HAL. */
        adev->default_rate = adev->mm_rate;
        pcm_config_mm_out.rate = adev->mm_rate;
        pcm_config_mm_in.rate = adev->mm_rate;
        pcm_config_hdmi_multi.rate = adev->mm_rate;
        pcm_config_esai_multi.rate = adev->mm_rate;

        /* Hand the generic device handle back to the framework. */
        *device = &adev->hw_device.common;
        return 0;
    }
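The open function relies on a common C idiom: the generic device structure is embedded as the first member of the HAL's private structure, so the pointer handed to the framework can later be cast back to the private type, for example in the close callback. The sketch below reproduces that idiom with stand-in types so that it compiles on its own. `struct demo_hw_device`, `struct demo_audio_device`, `DEMO_INTERFACE_NAME`, and the 48000 default are all placeholders, not the real libhardware definitions.

```c
#include <errno.h>
#include <stdlib.h>
#include <string.h>

/* Placeholder for the interface name the framework asks for. */
#define DEMO_INTERFACE_NAME "demo_audio_if"

/* Stand-in for hw_device_t. */
struct demo_hw_device {
    int (*close)(struct demo_hw_device *dev);
};

/* Stand-in for the HAL's private device structure. */
struct demo_audio_device {
    struct demo_hw_device common;   /* must stay the first member */
    unsigned default_rate;
};

static int demo_adev_close(struct demo_hw_device *dev)
{
    /* Valid because 'common' is the first member of demo_audio_device. */
    free((struct demo_audio_device *)dev);
    return 0;
}

static int demo_adev_open(const char *name, struct demo_hw_device **device)
{
    struct demo_audio_device *adev;

    if (strcmp(name, DEMO_INTERFACE_NAME) != 0)
        return -EINVAL;

    adev = calloc(1, sizeof(*adev));
    if (!adev)
        return -ENOMEM;

    adev->common.close = demo_adev_close;
    adev->default_rate = 48000;     /* assumed default for this sketch */

    *device = &adev->common;
    return 0;
}
```

Pairing every successful open with a call to the `close` callback keeps the allocation from leaking.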

Audio effects

The Android framework supports audio effects such as Acoustic Echo Cancellation (AEC), Noise Suppression (NS), and Automatic Gain Control (AGC). These effects can be added in the pre-processing library, and the audio HAL needs to support them.

Pre-processing effects

The Android platform provides audio effects in the audiofx package, which is available for developers to access. The supported pre-processing effects are:

- Acoustic Echo Cancellation

- Automatic Gain Control

- Noise Suppression

These effects work only if the device's hardware vendor supports them in the audio HAL. The effects for an audio source are applied based on the /system/etc/audio_effects.conf file. To add specific effects for a device, create a vendor-specific config file at /system/vendor/etc/audio_effects.conf with the required effects turned on. The following example shows an audio_effects.conf file with pre-processing effects enabled.

    libraries {
      bundle {
        path /system/lib/soundfx/libbundlewrapper.so
      }
      reverb {
        path /system/lib/soundfx/libreverbwrapper.so
      }
      visualizer {
        path /system/lib/soundfx/libvisualizer.so
      }
      downmix {
        path /system/lib/soundfx/libdownmix.so
      }
      pre_processing {
        path /system/lib/soundfx/libaudiopreprocessing.so
      }
    }

    effects {
      agc {
        library pre_processing
        uuid aa8130e0-66fc-11e0-bad0-0002a5d5c51b
      }
      aec {
        library pre_processing
        uuid bb392ec0-8d4d-11e0-a896-0002a5d5c51b
      }
      ns {
        library pre_processing
        uuid c06c8400-8e06-11e0-9cb6-0002a5d5c51b
      }
    }

    pre_processing {
      voice_communication {
        ns {
        }
        agc {
        }
        aec {
        }
      }
    }
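Each effect in this file is identified by a UUID string. As an illustration of how such a string can be turned into binary fields, the sketch below parses the textual form with `sscanf`. `struct demo_uuid` and `demo_parse_uuid` are names invented for this example; the field layout is assumed to follow the usual UUID breakdown, and the code does not check for trailing characters.

```c
#include <stdint.h>
#include <stdio.h>

/* Assumed field breakdown of a UUID for this sketch. */
struct demo_uuid {
    uint32_t time_low;
    uint16_t time_mid;
    uint16_t time_hi_and_version;
    uint16_t clock_seq;
    uint8_t  node[6];
};

/* Parse "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"; returns 0 on success, -1 on error. */
static int demo_parse_uuid(const char *str, struct demo_uuid *out)
{
    unsigned int f[10];
    int n = sscanf(str, "%8x-%4x-%4x-%4x-%2x%2x%2x%2x%2x%2x",
                   &f[0], &f[1], &f[2], &f[3], &f[4],
                   &f[5], &f[6], &f[7], &f[8], &f[9]);

    if (n != 10)
        return -1;

    out->time_low = (uint32_t)f[0];
    out->time_mid = (uint16_t)f[1];
    out->time_hi_and_version = (uint16_t)f[2];
    out->clock_seq = (uint16_t)f[3];
    for (int i = 0; i < 6; i++)
        out->node[i] = (uint8_t)f[4 + i];
    return 0;
}
```

Parsing the agc entry above, `aa8130e0-66fc-11e0-bad0-0002a5d5c51b`, yields `time_low` of `0xaa8130e0` and a node ending in `0x1b`.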

Portions of this page are reproduced from work created and shared by the Android Open Source Project and used according to terms described in the Creative Commons 2.5 Attribution License.